Building a Reminder Agent That Actually Remembers

From a friendly Slack assistant to a memory-driven AI system you can build yourself

Most reminder bots work in the same shallow way.

They accept a command, schedule a job, send a notification, and move on. They don’t learn how you think about time. They don’t adapt to how you respond to reminders. And once a reminder is completed, it vanishes completely, as if it never existed.

That approach is fine if you’re building alarms. It fails quietly when you’re building assistants.

A real reminder assistant is not just a scheduler. It’s a long-lived system that has to balance reliability with personalization. It must never forget an active reminder, never re-trigger a completed one, and yet still learn patterns like “this user usually snoozes by 10–15 minutes” or “work reminders tend to land in the morning.”

At Mem0, we built MeowMem0, a Slack reminder agent, to explore what happens when memory is treated as a first-class architectural concern rather than an afterthought. The result is not just a better reminder experience, but a reusable pattern for building AI agents that can remember without hallucinating, adapt without drifting, and archive without forgetting.

This post tells that story in two parts.

We’ll start from a product perspective: what MeowMem0 feels like to use and why it behaves differently from typical bots. Then we’ll switch gears to a deep engineering section that walks through the system design in enough detail that you could realistically build something similar yourself.

Part I: The product story, why reminders expose the memory problem

On the surface, MeowMem0 behaves exactly like you’d expect a modern Slack assistant to behave.

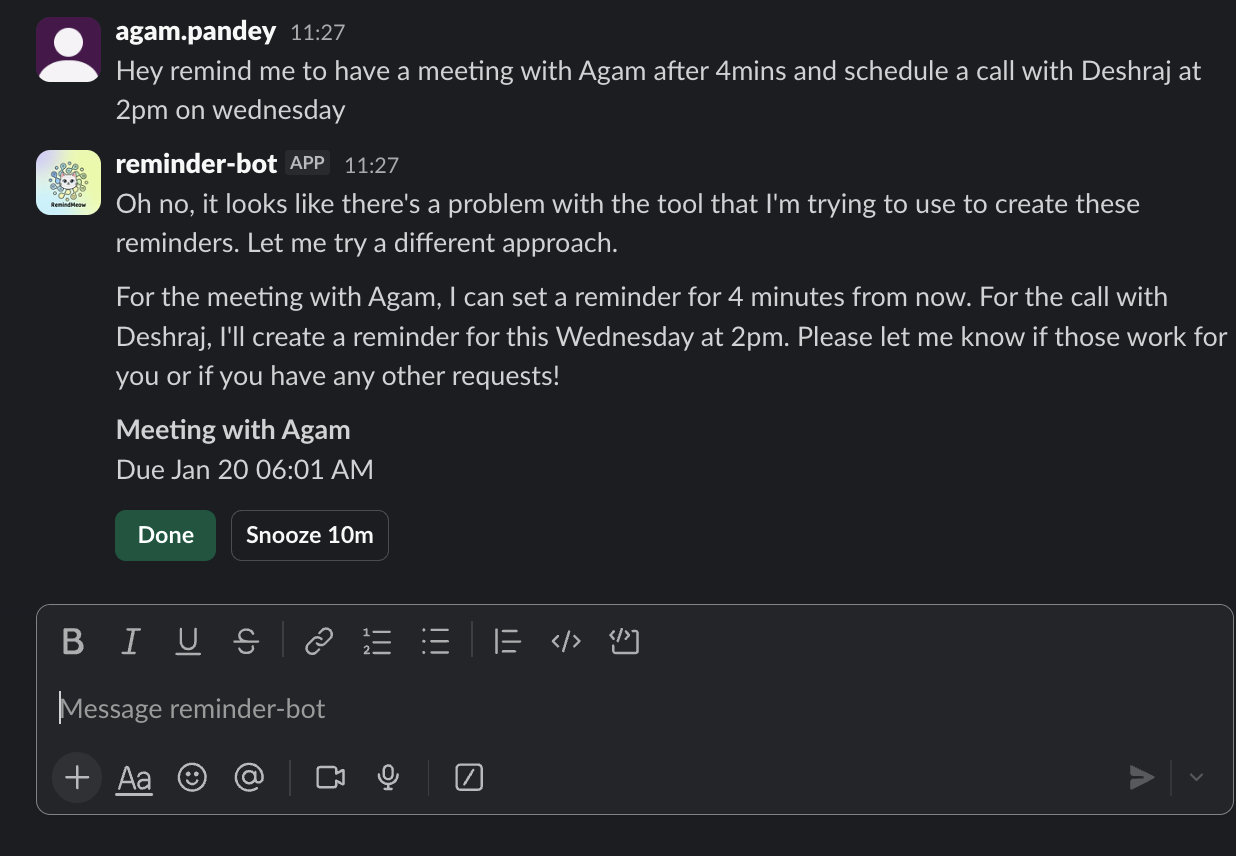

You can message it naturally:

“Remind me to pay rent tomorrow.”

“Push that to 6pm.”

“Snooze this by 10 minutes.”

“What’s coming up this week?”

When a reminder is due, it doesn’t just fire a notification. It pings you directly in Slack with context, buttons, and the ability to respond inline. You can mark it done, snooze it, or ask for details without breaking the conversational flow.

So far, this sounds like a lot of bots.

The difference shows up over time.

If you often confirm work reminders around 10am, MeowMem0 starts suggesting that time when you forget to specify one. If you regularly snooze by roughly 15 minutes, the agent learns that rhythm and reflects it back to you. And when a reminder is completed, it stops influencing future behavior, but it doesn’t disappear from your history.

This last point matters more than it sounds.

Most AI systems either forget too aggressively or remember too loosely. Forgetting loses valuable signal. Loose memory creates contradictions. MeowMem0 avoids both by separating two ideas that are often conflated:

What is true vs. what is remembered

That separation is the foundation of the entire system.

Truth vs. memory: the design principle that changes everything

In MeowMem0, reminders themselves are not “memories.”

They are facts.

If a reminder exists, it must fire. If it is marked done, it must never fire again. That invariant lives in a traditional system-of-record database, and the current reminder state is injected into the system prompt on every turn. The model sees the real reminder state, not a recollection of it.

Memory, on the other hand, is used for something very different: behavior, preferences, and context. Memory answers questions like:

How does this user usually phrase reminders?

What time patterns do they tend to confirm?

How often do they snooze?

What categories do they implicitly use?

Mem0 is used for this second class of information, plus a mirrored memory trail of reminders for recall and continuity. We write active and archived reminders into Mem0 categories, but the model is never allowed to treat those as truth. The DB is the truth. Mem0 is the personalization layer.

This boundary is what allows the system to be both adaptive and correct. The agent can learn without becoming the source of truth. And that distinction is exactly what breaks down in most memory-augmented agents.

From idea to system: what MeowMem0 is made of

At a high level, MeowMem0 is composed of four major parts:

Slack, as the real-world interface

A FastAPI backend, which orchestrates everything

A relational database, which holds reminder state

Mem0, which holds long-term memory

An LLM-based agent sits at the center, but it never directly mutates state. It reasons, decides, and calls tools. All real changes happen in deterministic code, with the model driven by the Claude Agent SDK and a constrained tool surface.

[ARCHITECTURE DIAGRAM PLACEHOLDER: Slack → FastAPI → Agent → DB + Mem0]

This structure might look conservative, but that’s intentional. When you’re building agents that deal with time, notifications, and trust, boring architecture is a feature.

Part II: The engineering section, how the system actually works

This is the part where we get concrete. If you want to build something like MeowMem0, this section is the blueprint.

Slack ingestion: assuming the world is unreliable

Slack delivers events at least once. That means duplicate messages are not a corner case; they are expected behavior.

The backend exposes three HTTP endpoints:

POST /slack/events for Event API messages

POST /slack/commands for slash commands

POST /slack/interactions for button clicks

Every incoming request is verified with Slack’s signing secret. Because the signature covers the request timestamp, stale requests can be rejected, which is what prevents replay attacks. Before any business logic runs, events are deduplicated using a short-TTL cache keyed by Slack’s event ID.

If you skip deduplication, you will eventually double-create reminders in production.

[CODE PLACEHOLDER: Slack signature verification + event deduplication logic]
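A minimal sketch of both checks, using only the Python standard library. The signature scheme (an HMAC-SHA256 over `v0:{timestamp}:{raw body}`, compared against the `X-Slack-Signature` header, with requests older than five minutes rejected) follows Slack’s documented request-signing process. The function and class names are hypothetical, and the in-process cache assumes a single worker.

```python
import hashlib
import hmac
import time
from typing import Dict, Optional


def verify_slack_signature(signing_secret: str, timestamp: str, body: bytes,
                           signature: str, now: Optional[float] = None,
                           max_age_seconds: int = 300) -> bool:
    """Verify X-Slack-Signature against the raw request body (Slack's v0 scheme)."""
    now = time.time() if now is None else now
    try:
        sent_at = int(timestamp)
    except ValueError:
        return False
    # Reject stale requests so a captured request can't be replayed later.
    if abs(now - sent_at) > max_age_seconds:
        return False
    basestring = b"v0:" + timestamp.encode() + b":" + body
    expected = "v0=" + hmac.new(signing_secret.encode(), basestring, hashlib.sha256).hexdigest()
    # Constant-time comparison so timing doesn't reveal how much of the signature matched.
    return hmac.compare_digest(expected.encode(), signature.encode())


class EventDeduplicator:
    """In-process TTL cache of Slack event IDs that were already handled."""

    def __init__(self, ttl_seconds: float = 600.0):
        self.ttl = ttl_seconds
        self._seen: Dict[str, float] = {}

    def first_time(self, event_id: str, now: Optional[float] = None) -> bool:
        """Return True exactly once per event ID within the TTL window."""
        now = time.monotonic() if now is None else now
        # Evict expired IDs so the cache doesn't grow without bound.
        self._seen = {k: t for k, t in self._seen.items() if now - t < self.ttl}
        if event_id in self._seen:
            return False
        self._seen[event_id] = now
        return True
```

In a FastAPI route, pass the raw bytes from `await request.body()` rather than re-serialized JSON, since the signature is computed over the exact bytes Slack sent. With several workers or instances, the same idea works with a shared store such as Redis: set the event ID with an expiry and treat an existing key as a duplicate.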

Slack-specific formatting (mentions, channel tags, markup) is stripped away immediately, so the agent only ever sees clean text. This considerably simplifies intent handling and reduces prompt noise.
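Here is one way to do that normalization, assuming Slack’s standard message formatting: user mentions like `<@U123>`, channel links like `<#C123|general>`, special mentions like `<!here>`, links like `<https://example.com|label>`, `*bold*`/`_italic_`/`~strike~` markup, and HTML-escaped `&`, `<`, `>`. The function name and exact rules are illustrative, not MeowMem0’s actual code.

```python
import html
import re

_USER_MENTION = re.compile(r"<@[A-Z0-9]+(?:\|[^>]*)?>")
_CHANNEL = re.compile(r"<#[A-Z0-9]+(?:\|([^>]*))?>")
_SPECIAL = re.compile(r"<![^>]*>")
_LINK = re.compile(r"<((?:https?|mailto):[^|>]+)(?:\|([^>]*))?>")
# Emphasis markers only count at word boundaries, so snake_case stays intact.
_EMPHASIS = re.compile(r"(?<!\w)([*_~])([^\n]+?)\1(?!\w)")


def normalize_slack_text(text: str) -> str:
    """Turn Slack mrkdwn into plain text for the agent."""
    text = _USER_MENTION.sub("", text)                                    # drop @mentions (often the bot)
    text = _CHANNEL.sub(lambda m: f"#{m.group(1)}" if m.group(1) else "", text)  # keep channel name
    text = _SPECIAL.sub("", text)                                         # drop @here / @channel
    text = _LINK.sub(lambda m: m.group(2) or m.group(1), text)            # keep label, else URL
    text = _EMPHASIS.sub(r"\2", text)                                     # *bold* -> bold
    text = html.unescape(text)                                            # Slack escapes &, <, >
    return re.sub(r"\s+", " ", text).strip()
```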

The agent loop: deterministic first, generative second

Once a message is normalized, it enters a single orchestration loop.

Before calling the model, the system checks for pending multi-turn actions. These include cases like:

A reminder was created without a time

The system proposed a default time and is waiting for confirmation

The user said “reschedule that” and multiple reminders match

Short replies such as “yes,” “no,” or “the second one” are resolved using lightweight heuristics rather than another LLM call. This keeps flows predictable and avoids unnecessary inference costs.
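A sketch of that heuristic layer. `PendingAction` and the word lists are hypothetical; the point is that a reply either resolves deterministically against the pending action or returns `None`, in which case the message goes to the model as usual.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical vocabularies; tune them to how your users actually reply.
YES = {"yes", "y", "yep", "yeah", "sure", "ok", "okay", "confirm", "sounds good"}
NO = {"no", "n", "nope", "nah", "cancel"}
ORDINALS = {"first": 1, "second": 2, "third": 3, "fourth": 4, "fifth": 5}


@dataclass
class PendingAction:
    """Hypothetical record of what the bot is waiting on from the user."""
    kind: str                                   # "confirm_time" or "choose_reminder"
    candidates: list = field(default_factory=list)


def resolve_short_reply(text: str, pending: PendingAction) -> Optional[dict]:
    """Resolve a short reply without an LLM call; None means 'hand it to the model'."""
    t = re.sub(r"[^\w\s]", "", text.lower()).strip()
    if pending.kind == "confirm_time":
        if t in YES:
            return {"action": "confirm"}
        if t in NO:
            return {"action": "reject"}
    elif pending.kind == "choose_reminder" and pending.candidates:
        for word in t.split():
            if word == "last":
                return {"action": "select", "reminder": pending.candidates[-1]}
            index = ORDINALS.get(word) or (int(word) if word.isdecimal() else None)
            # Users count from 1, so "second" or "2" means candidates[1].
            if index is not None and 1 <= index <= len(pending.candidates):
                return {"action": "select", "reminder": pending.candidates[index - 1]}
    return None
```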

Only when the system has enough information to act does it invoke the model.

This ordering is deliberate. Reminder systems fail when generative reasoning is allowed to override incomplete state.

Memory prefetching: when not to remember

One of the most subtle performance and quality issues in memory-augmented agents is over-fetching.

MeowMem0 explicitly decides whether memory is relevant for a given intent. Listing reminders usually doesn’t need personalization. Creating or disambiguating one often does.

When memory is needed, the system fetches targeted memories from Mem0 (preferences and behavior summaries), then caches the result with a strict TTL to keep latency low.

[SEQUENCE DIAGRAM PLACEHOLDER: Memory prefetch decision flow]

This prevents irrelevant long-term context from leaking into reasoning and keeps the prompt focused.
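The prefetch decision and TTL cache might look like this. The intent labels, the `search` callback (standing in for a Mem0 search call), and the 120-second default TTL are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical intent labels; which ones benefit from memory is a design choice.
MEMORY_INTENTS = {"create_reminder", "update_reminder", "disambiguate"}


class MemoryPrefetcher:
    """Fetch personalization memories only for intents that benefit from them."""

    def __init__(self, search: Callable[[str, str], List[str]], ttl_seconds: float = 120.0):
        self.search = search            # stands in for a Mem0 search call
        self.ttl = ttl_seconds
        self._cache: Dict[str, Tuple[float, List[str]]] = {}

    def context_for(self, user_id: str, intent: str, now: Optional[float] = None) -> List[str]:
        if intent not in MEMORY_INTENTS:
            return []                   # e.g. listing reminders: DB state is enough
        now = time.monotonic() if now is None else now
        cached = self._cache.get(user_id)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]
        memories = self.search(user_id, "reminder preferences and behavior summaries")
        self._cache[user_id] = (now, memories)
        return memories
```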

The system prompt: enforcing a hard contract

The system prompt is not creative. It is contractual.

It tells the model, explicitly:

The database is the source of truth for reminders

Mem0 provides personalization only

The model must never invent or assume reminder state

All state changes must happen via tools

Claude is given a narrow toolset built around create, update, snooze, list, and mark done. When it decides an action is required, it emits a structured tool call; the model itself never mutates state.

[CODE PLACEHOLDER: Tool definitions and example tool invocation]
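Below are hypothetical schemas for three of the tools, written as JSON Schema dictionaries in the `name` / `description` / `input_schema` shape used by Anthropic Messages API tool definitions, plus a small hand-rolled validator that rejects malformed calls before any code runs. Field names such as `time_text` and `reminder_id` are illustrative; a production system would more likely validate with a library such as `jsonschema` or Pydantic.

```python
from typing import Any, Dict, List

# Hypothetical schemas for three tools; property names are illustrative.
TOOLS: List[Dict[str, Any]] = [
    {
        "name": "create_reminder",
        "description": "Create a reminder. Pass the user's time phrase verbatim; the backend parses it.",
        "input_schema": {
            "type": "object",
            "properties": {
                "title": {"type": "string"},
                "time_text": {"type": "string"},   # e.g. "tomorrow 6pm"; omitted if none given
                "category": {"type": "string"},
            },
            "required": ["title"],
        },
    },
    {
        "name": "snooze_reminder",
        "description": "Push an existing reminder forward by a number of minutes.",
        "input_schema": {
            "type": "object",
            "properties": {
                "reminder_id": {"type": "integer"},
                "minutes": {"type": "integer", "minimum": 1},
            },
            "required": ["reminder_id", "minutes"],
        },
    },
    {
        "name": "mark_done",
        "description": "Mark a reminder as completed so it never fires again.",
        "input_schema": {
            "type": "object",
            "properties": {"reminder_id": {"type": "integer"}},
            "required": ["reminder_id"],
        },
    },
]

_PY_TYPES = {"string": str, "integer": int}


def validate_tool_call(name: str, args: Dict[str, Any]) -> List[str]:
    """Check a model-emitted tool call against its schema before any code runs."""
    schema = next((t["input_schema"] for t in TOOLS if t["name"] == name), None)
    if schema is None:
        return [f"unknown tool: {name}"]
    errors = [f"missing: {key}" for key in schema["required"] if key not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            errors.append(f"unexpected: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(value, bool) or not isinstance(value, _PY_TYPES[spec["type"]]):
            errors.append(f"wrong type: {key}")
        elif "minimum" in spec and value < spec["minimum"]:
            errors.append(f"below minimum: {key}")
    return errors
```

Passing the user’s time phrase through verbatim and parsing it in backend code keeps date arithmetic out of the model’s hands, which fits the “deterministic first” ordering described earlier.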

This design makes the system auditable, testable, and resilient to model drift.

Claude Agent integration and tool contract

We integrate the Claude Agent SDK as the orchestration layer, but we keep its surface area narrow. The agent does not mutate state directly. It reasons, selects a tool, and returns structured inputs. Deterministic code executes the change, logs it, and syncs memory. This separation is what keeps the system trustworthy when the model inevitably makes mistakes.

The toolset is intentionally small and typed. Each tool maps to a concrete function in agent-backend/main.py, with the database as source of truth and Mem0 as long-term context. If you remove this tool boundary, you lose auditability and open the door to hallucinated updates.

| Tool | What it does |
|---|---|
| create_reminder | Create a reminder with natural-language time parsing and category inference. |
| update_reminder | Change title, description, or due time; marks reschedules for behavior tracking. |
| mark_done | Complete a reminder and move its memory to the archived category. |
| snooze_reminder | Push a reminder forward and record the snooze interval. |
| list_reminders | Return DB-backed lists (active, completed, all) with stable formatting. |
| search_reminders | Search reminders by title/description in the database. |
| delete_reminder | Delete a reminder only when the user explicitly asks. |
| set_preference | Write long-term preferences into Mem0 memory. |
| get_preferences | Read preferences from Mem0 and return them to the agent. |
| list_rescheduled_reminders | Surface reminders with reschedule history from Mem0 or DB. |
| clarify_reminder | Ask the user to disambiguate when multiple reminders match. |

[CODE PLACEHOLDER: Claude Agent SDK call + tool schema wiring]
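On the deterministic side of the boundary, tool execution can be a plain dispatch table. This sketch does not show the Claude Agent SDK wiring itself, and the class and handler names are hypothetical. It applies the state change through the handler first, records an audit entry, and only then syncs memory on a best-effort basis, so a Mem0 outage degrades personalization without blocking the state change.

```python
import logging
from typing import Any, Callable, Dict, List

log = logging.getLogger("reminder_agent.tools")   # hypothetical logger name


class ToolExecutor:
    """Runs model-selected tools: state change, audit entry, then best-effort memory sync."""

    def __init__(self, handlers: Dict[str, Callable[..., dict]],
                 sync_memory: Callable[[str, dict], None]):
        self.handlers = handlers          # tool name -> deterministic function (DB write)
        self.sync_memory = sync_memory    # e.g. a wrapper that writes to Mem0
        self.audit: List[Dict[str, Any]] = []   # an audit_log table in a real system

    def run(self, user_id: str, name: str, args: Dict[str, Any]) -> Dict[str, Any]:
        handler = self.handlers.get(name)
        if handler is None:
            # Unknown tools are reported back to the model, never guessed at.
            return {"ok": False, "error": f"unknown tool: {name}"}
        result = handler(user_id=user_id, **args)   # the only place state changes
        self.audit.append({"user_id": user_id, "tool": name, "args": args, "result": result})
        try:
            self.sync_memory(user_id, {"tool": name, "result": result})
        except Exception:
            # Memory is not the source of truth, so a sync failure must not undo the change.
            log.warning("memory sync failed after %s", name, exc_info=True)
        return {"ok": True, "result": result}
```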

Prompt building: truth first, memory second

The prompt is assembled from two sources with different guarantees. First, we load the current reminder state from the database and render it directly into the system prompt. This makes the model’s view of reality deterministic: it never has to guess or reconstruct reminder status, and it cannot change that status except through a tool. Second, we enrich the prompt with Mem0 signals (preferences and behavior summaries) so the agent can adapt without drifting. We intentionally keep that memory slice small and targeted.

The result is a prompt that feels personalized but stays grounded. It’s enough to suggest a default time or interpret a user’s pattern, but not enough to blur the boundary between memory and state.

[CODE PLACEHOLDER: system prompt assembly showing DB reminders + Mem0 memory context]
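A minimal sketch of that assembly, assuming reminders arrive as dictionaries with `id`, `title`, `status`, and `due_at` fields and memories as short strings. The section headings and rule wording are illustrative; what matters is the order (database state first, clearly labeled) and the hard cap on how many memories get in.

```python
from datetime import datetime
from typing import Iterable, List


def build_system_prompt(reminders: Iterable[dict], memories: Iterable[str],
                        now: datetime, max_memories: int = 5) -> str:
    """Render DB reminder state first, then a small, labeled slice of memory."""
    lines: List[str] = [
        f"Current time: {now.isoformat(timespec='minutes')}",
        "RULES:",
        "- The reminder list below comes from the database and is the only source of truth.",
        "- User context is a personalization hint. Never treat it as reminder state.",
        "- Never invent reminders. Change state only by calling tools.",
        "",
        "ACTIVE REMINDERS (database):",
    ]
    active = sorted((r for r in reminders if r["status"] == "active"), key=lambda r: r["due_at"])
    for r in active:
        lines.append(f"- [{r['id']}] {r['title']} (due {r['due_at'].isoformat(timespec='minutes')})")
    if not active:
        lines.append("- none")
    lines += ["", "USER CONTEXT (memory, may be incomplete):"]
    # Cap the memory slice so personalization never crowds out state.
    lines += [f"- {m}" for m in list(memories)[:max_memories]] or ["- none"]
    return "\n".join(lines)
```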

Reminder creation and the missing-time problem

Users frequently omit times: “tomorrow,” “later,” “in the evening.”

Guessing is dangerous. Asking repeatedly is annoying.

MeowMem0 resolves this by combining inference with confirmation. If no time is supplied, the system infers a category and looks up the user’s most common confirmed times for that category from behavior memory. It proposes a default and asks for explicit confirmation before scheduling.

This preserves user trust while reducing friction.

[SCREENSHOT PLACEHOLDER: Time confirmation flow in Slack]
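The infer-then-confirm step can be sketched as follows. The keyword-based category inference and the fallback times are stand-ins (the article does not say how MeowMem0 infers categories), and `confirmed` is assumed to be a per-category list of times the user previously accepted, as read from behavior memory.

```python
import re
from collections import Counter
from datetime import time
from typing import Dict, List

# Hypothetical keyword rules and fallbacks; the article doesn't specify these.
CATEGORY_KEYWORDS = {
    "work": {"meeting", "standup", "report", "deploy", "email"},
    "finance": {"rent", "invoice", "bill", "pay"},
    "health": {"gym", "doctor", "meds", "run"},
}
FALLBACK_TIMES = {"work": time(10, 0), "finance": time(9, 0), "health": time(18, 0)}


def infer_category(title: str) -> str:
    words = set(re.findall(r"[a-z]+", title.lower()))
    for category, keywords in CATEGORY_KEYWORDS.items():
        if words & keywords:
            return category
    return "general"


def propose_default_time(title: str, confirmed: Dict[str, List[time]]) -> dict:
    """Suggest a time from confirmed history; the caller must still ask for a yes."""
    category = infer_category(title)
    history = confirmed.get(category, [])
    if history:
        # Most frequently confirmed time wins; ties go to the one seen first.
        suggested, _ = Counter(history).most_common(1)[0]
        source = "history"
    else:
        suggested = FALLBACK_TIMES.get(category, time(9, 0))
        source = "fallback"
    return {
        "category": category,
        "suggested": suggested,
        "source": source,
        "needs_confirmation": True,   # never schedule on a guess
        "question": f"No time given. Schedule it for {suggested.strftime('%H:%M')}?",
    }
```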

The database: intentionally boring

All reminders live in a relational database (SQLite locally, Supabase in production). The schema tracks reminders, statuses, audit logs, behavior stats, and a short conversation window.

[DIAGRAM PLACEHOLDER: Database schema overview]
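For illustration, a SQLite schema covering the four concerns listed above might look like this. Table and column names are assumptions, not MeowMem0’s actual schema, and the `AUTOINCREMENT` syntax is SQLite’s; a Supabase (Postgres) version would use identity columns and `timestamptz` instead.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS reminders (
    id               INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id          TEXT NOT NULL,
    title            TEXT NOT NULL,
    description      TEXT,
    category         TEXT,
    due_at           TEXT NOT NULL,            -- ISO-8601 UTC
    status           TEXT NOT NULL DEFAULT 'active'
                     CHECK (status IN ('active', 'done', 'archived')),
    last_notified_at TEXT,                     -- guards against duplicate pings
    created_at       TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE INDEX IF NOT EXISTS idx_reminders_due ON reminders (status, due_at);

CREATE TABLE IF NOT EXISTS audit_log (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    reminder_id INTEGER REFERENCES reminders (id),
    action      TEXT NOT NULL,                 -- create / update / snooze / done / delete
    payload     TEXT,                          -- JSON of the tool arguments
    created_at  TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);

CREATE TABLE IF NOT EXISTS behavior_stats (
    user_id     TEXT NOT NULL,
    metric      TEXT NOT NULL,                 -- e.g. 'snooze_minutes', 'confirmed_time:work'
    value       TEXT NOT NULL,
    occurrences INTEGER NOT NULL DEFAULT 1,
    PRIMARY KEY (user_id, metric, value)
);

CREATE TABLE IF NOT EXISTS conversation_turns (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id    TEXT NOT NULL,
    role       TEXT NOT NULL,
    content    TEXT NOT NULL,
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure every table exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```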

The database is what makes the system reliable. If Mem0 is unavailable, reminders still fire. If the model misbehaves, state remains correct.

A useful rule of thumb emerged during development:

If losing the data would break correctness, it belongs in the database.

If losing it would only reduce personalization, it belongs in memory.

Mem0: long-term memory without drift

Mem0 stores long-term signals and a mirrored reminder history using explicit categories:

Active reminders

Archived reminders

User preferences

Behavior summaries

Optional conversation memory

When a reminder is marked done, its active memory is removed and an archived memory is written instead. This avoids stale memory influencing future behavior while keeping history searchable.

The mirror is never treated as authoritative state. It’s there to improve recall and personalization, not to decide what should fire.
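A sketch of the active-to-archived swap. `FakeMemoryStore` is an in-memory stand-in so the example runs offline; its `add`/`find`/`delete` methods are hypothetical and do not mirror Mem0’s client API. The category strings `active_reminders` and `archived_reminders` are likewise assumed names.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeMemoryStore:
    """Offline stand-in for a memory backend; method names are hypothetical."""
    items: Dict[str, dict] = field(default_factory=dict)
    _next: int = 0

    def add(self, user_id: str, text: str, category: str, reminder_id: int) -> str:
        self._next += 1
        memory_id = f"m{self._next}"
        self.items[memory_id] = {"user_id": user_id, "text": text,
                                 "category": category, "reminder_id": reminder_id}
        return memory_id

    def find(self, user_id: str, category: str, reminder_id: int) -> List[str]:
        return [k for k, v in self.items.items()
                if v["user_id"] == user_id and v["category"] == category
                and v["reminder_id"] == reminder_id]

    def delete(self, memory_id: str) -> None:
        self.items.pop(memory_id, None)


def archive_reminder_memory(store: FakeMemoryStore, user_id: str, reminder_id: int,
                            title: str, completed_at: str) -> str:
    """Run after the DB marks a reminder done: write the archived memory, then drop the active one."""
    archived_id = store.add(user_id, f"Completed '{title}' at {completed_at}",
                            "archived_reminders", reminder_id)
    for memory_id in store.find(user_id, "active_reminders", reminder_id):
        store.delete(memory_id)
    return archived_id
```

Writing the archived entry before deleting the active one means a failure midway leaves a duplicate rather than a gap in history; either way, the database still decides what fires.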

Behavior is summarized rather than logged raw. The model sees patterns, not noise.

This is how MeowMem0 learns over time without accumulating contradictions.
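As an example of summarizing rather than logging, raw snooze intervals could be collapsed into a single sentence before being written to memory. The three-event threshold and the rounding to five minutes are arbitrary choices for this sketch, not MeowMem0’s documented behavior.

```python
from statistics import median
from typing import List, Optional


def summarize_snoozes(snooze_minutes: List[int], min_events: int = 3) -> Optional[str]:
    """Collapse raw snooze events into one sentence suitable for a memory write."""
    if len(snooze_minutes) < min_events:
        return None                      # too little signal to call it a pattern
    typical = median(snooze_minutes)     # robust to the occasional very long snooze
    # Round to the nearest 5 minutes so small variations don't churn the summary.
    rounded = int(5 * round(typical / 5)) if typical >= 5 else int(round(typical))
    return f"Usually snoozes by about {rounded} minutes."
```

The median ignores the occasional one-hour snooze, and the rounding keeps the sentence stable, so the memory does not need rewriting after every small variation.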

Notifications and archiving: separating time from conversation

Reminder delivery runs independently from the agent loop. A background process checks for due reminders and sends Slack notifications with interactive controls.

Each reminder tracks its last notification timestamp to avoid duplicate pings. Overdue reminders are archived via an external cron endpoint, which keeps scheduling reliable even if the app instance sleeps or restarts.

[DIAGRAM PLACEHOLDER: Notification + archival flow]
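One tick of such a notifier might look like this, assuming a `reminders` table with `status`, `due_at`, and `last_notified_at` columns and timestamps stored as ISO-8601 UTC strings in one fixed format (so string comparison matches time order). `notify` stands in for the Slack post; its name and signature are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Callable, List


def send_due_reminders(conn: sqlite3.Connection, notify: Callable[[str, str], None],
                       now: datetime) -> List[int]:
    """One tick of a background notifier. Returns the IDs it pinged."""
    # Must match the stored due_at format exactly for string comparison to work.
    now_s = now.astimezone(timezone.utc).isoformat(timespec="seconds")
    due = conn.execute(
        """SELECT id, user_id, title FROM reminders
           WHERE status = 'active' AND due_at <= ?
             AND (last_notified_at IS NULL OR last_notified_at < due_at)""",
        (now_s,),
    ).fetchall()
    sent = []
    for reminder_id, user_id, title in due:
        # Claim the row first; rowcount == 0 means another worker already did.
        claimed = conn.execute(
            """UPDATE reminders SET last_notified_at = ?
               WHERE id = ? AND (last_notified_at IS NULL OR last_notified_at < due_at)""",
            (now_s, reminder_id),
        ).rowcount
        conn.commit()
        if claimed:
            notify(user_id, f"Reminder: {title}")
            sent.append(reminder_id)
    return sent
```

Because the row is claimed before the message is sent, a failed Slack call means that ping is skipped rather than duplicated; sending first would risk double pings instead. Snoozing moves `due_at` past `last_notified_at`, so the reminder becomes eligible again at its new time.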

Slack app integration: wiring the bot into a workspace

The backend endpoints are only half the story. To make the bot real, you register a Slack app and point it to your public URLs. This is done in the Slack app dashboard at api.slack.com/apps. Once the app is created, you enable Events, Commands, and Interactivity and paste in the endpoints your FastAPI service exposes.

For MeowMem0, the app expects /slack/events for event callbacks, /slack/commands for slash commands, and /slack/interactions for button actions. The signing secret and bot token are stored in the backend environment so the service can verify requests and post responses back into Slack.

[SCREENSHOT PLACEHOLDER: Slack app settings with Events + Interactivity URLs]

What this architecture enables

MeowMem0 is not interesting because it sends reminders. It’s interesting because it demonstrates a pattern for building AI agents that last.

You can have long-term personalization without letting memory become truth.

You can archive without forgetting.

You can adapt behavior without inflating prompts.

You can build assistants that improve over time without becoming unreliable.

This is what memory layers like Mem0 are actually for: not longer context windows, but clean, intentional persistence. The underlying model capabilities come from the Claude platform, but the reliability comes from the contract we enforce around it.

If you’re building agents meant to live beyond a demo, this boundary between state and memory isn’t optional. It’s the difference between something users try once and something they trust every day.